Pathtracer

- Category: Graphics

- Project URL: https://github.com/Hanszhang12/Pathtracer

Project Details

In this project, we worked on several stages of the ray-tracing pipeline and implemented efficient shading of surfaces and objects. We began with the foundation of ray tracing: generating rays and computing their intersections with objects. We then built a bounding volume hierarchy (BVH) to speed up the otherwise slow intersection tests. Next, we implemented direct illumination (zero-bounce and one-bounce) based on the physics of light and our ray implementation. We complemented these calculations with their counterpart, indirect illumination, which produced seamless images using global illumination principles. Lastly, we implemented an optimization called “adaptive sampling,” which stops sampling a pixel once its value has converged to an appropriate level.

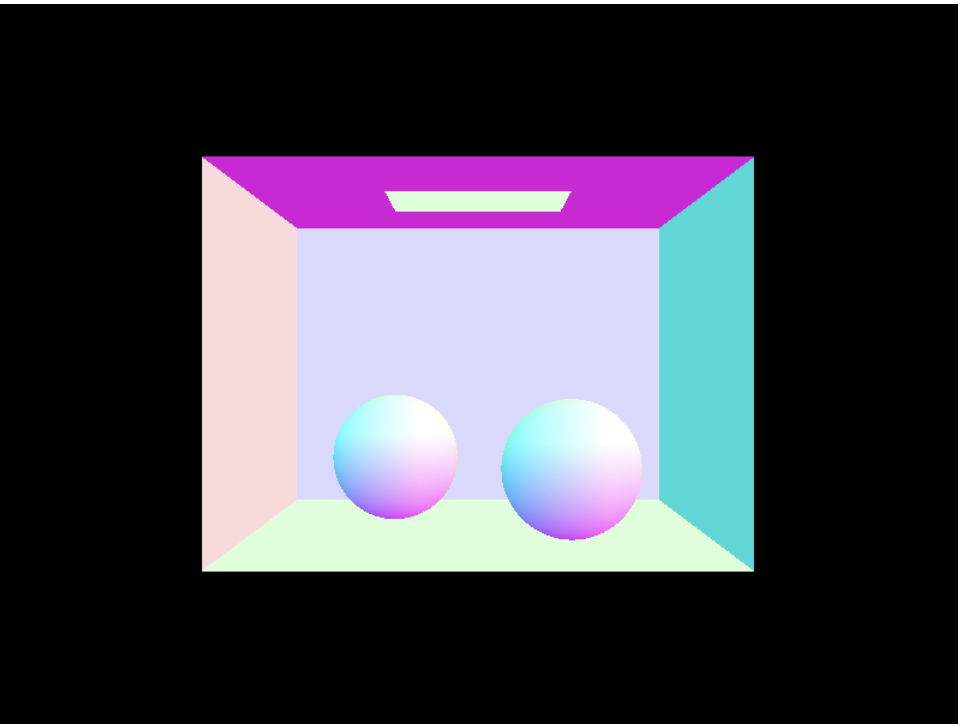

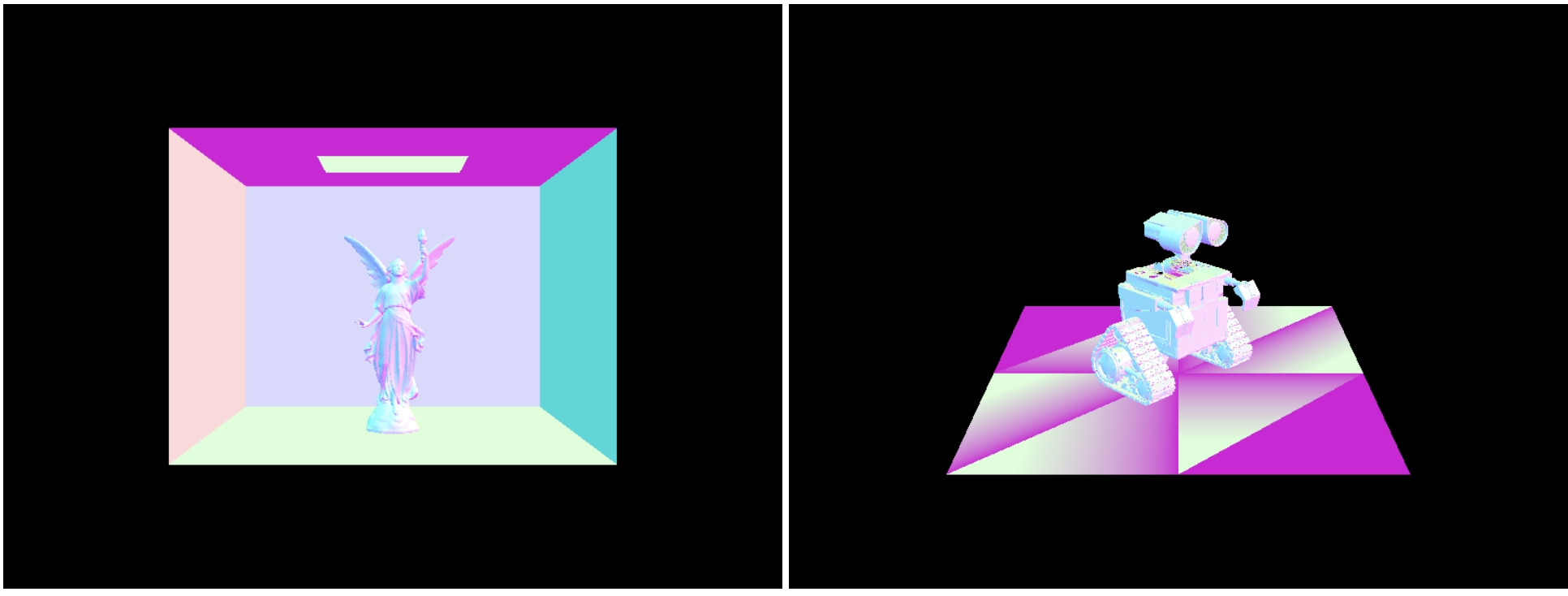

Ray Generation and Intersection

Our ray generation algorithm consists of two steps. First, we converted the input image coordinates to camera coordinates by mapping them onto the sensor plane at z = -1, whose corners lie at (±tan(hFov/2), ±tan(vFov/2), -1). Second, we converted the resulting direction into world space and normalized it. To generate pixel samples, we changed from pixel coordinates to normalized image coordinates by dividing the given (x, y) coordinates by the width and height of the image respectively. Keeping in mind that the origin of the coordinate space is the bottom left corner, we iterated over the number of samples and used the gridSampler object to obtain new sample points to pass into the generate ray function. We then used the results to update our sample and sampleCount buffers.

To test for triangle intersection, we first intersected the ray with the plane of the triangle and then used barycentric coordinates to check whether the point reached at time t lies inside the triangle. Taking the cross product of the differences between the triangle’s vertices gave us the normal N of the triangle, which we used to compute the intersection time from the ray equation $t = \frac{(p' - o) \cdot N}{d \cdot N}$, where p' is a point on the plane (for example a vertex), o is the ray origin, and d is the ray direction. We had to verify that this intersection time t lay in the valid interval between min_t and max_t. We then derived the barycentric coordinates from ratios of triangle areas: each sub-triangle area comes from a cross product and is divided by the squared norm of the triangle’s normal vector. Finally, we verified that the resulting coefficients were between 0 and 1 and updated the fields of isect with the discovered information.

Sphere intersection tests are very similar, with the caveat that a point p lies on the sphere when it satisfies the sphere equation $(p - c)^2 = r^2$. Substituting the ray p = o + t d into this equation yields a quadratic in t, and the two intersection times are simply its roots.
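The intersection tests described above can be sketched as follows. This is a minimal illustration in Python rather than the project's own code: the helper names (`sub`, `dot`, `cross`, `along`) and the function signatures are assumptions made for this example, and vectors are plain 3-tuples.

```python
import math

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def along(o, d, t):
    """Point o + t * d on the ray."""
    return (o[0] + t * d[0], o[1] + t * d[1], o[2] + t * d[2])

def intersect_triangle(o, d, p0, p1, p2, min_t=0.0, max_t=math.inf):
    """Return (t, (a, b, c)) for a hit, or None for a miss."""
    n = cross(sub(p1, p0), sub(p2, p0))      # unnormalized triangle normal
    denom = dot(d, n)
    if abs(denom) < 1e-12:                   # ray is parallel to the plane
        return None
    t = dot(sub(p0, o), n) / denom           # t = (p' - o) . N / (d . N)
    if t < min_t or t > max_t:
        return None
    p = along(o, d, t)
    nn = dot(n, n)                           # squared norm of the normal
    # Signed sub-triangle areas relative to the whole triangle.
    a = dot(n, cross(sub(p2, p1), sub(p, p1))) / nn   # weight of p0
    b = dot(n, cross(sub(p0, p2), sub(p, p2))) / nn   # weight of p1
    c = 1.0 - a - b                                   # weight of p2
    if min(a, b, c) < 0.0:                   # outside the triangle
        return None
    return t, (a, b, c)

def intersect_sphere(o, d, center, radius, min_t=0.0, max_t=math.inf):
    """Return the nearest valid root of |o + t d - c|^2 = r^2, or None."""
    oc = sub(o, center)
    qa = dot(d, d)
    qb = 2.0 * dot(oc, d)
    qc = dot(oc, oc) - radius * radius
    disc = qb * qb - 4.0 * qa * qc
    if disc < 0.0:                           # no real roots: the ray misses
        return None
    root = math.sqrt(disc)
    for t in ((-qb - root) / (2.0 * qa), (-qb + root) / (2.0 * qa)):
        if min_t <= t <= max_t:
            return t
    return None
```

For example, a ray starting at (0.25, 0.25, 1) and pointing down the negative z axis hits the triangle with vertices (0, 0, 0), (1, 0, 0), (0, 1, 0) at t = 1 with barycentric weights (0.5, 0.25, 0.25).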

Bounding Volume Hierarchy

The first step was to compute the number of primitives and the bounding box (BBox) that encloses them. I did this with a for loop that expanded the current BBox with each primitive and incremented the primitive count. I also set the node's start and end pointers to the ones passed into the function. Next, I checked whether the primitive count was less than the max leaf size. If it was, the node became a leaf and I set its left and right children to NULL. If it wasn’t, I split the range between start and end into two branches and recursively called construct_bvh on each. Originally, I wanted to split at the middle of the centroids along the longest axis. However, I ran into too many segfaults, so I decided to split at the median primitive instead. The cow took around 40 seconds to render without BVH acceleration and less than a second with it. More complex scenes such as maxplanck take a very long time to render without a BVH but render in a matter of seconds with one. Rendering with the BVH is much faster than the naive approach because a ray is tested against roughly O(log N) primitives instead of all N. Each level of the tree splits the primitives in two, so a ray that misses a node's bounding box can skip that entire subtree.
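A median-split construction along these lines might look like the sketch below. It is written in Python for brevity and is not the project's code: the `Prim` and `Node` classes are hypothetical, the primitives are sorted by centroid along the longest axis before being cut at the median (the write-up does not say how they were ordered), and the leaf test uses `<=` so that the recursion always terminates for `max_leaf_size >= 1`.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]

@dataclass
class Prim:
    """Hypothetical primitive, represented only by its bounding box."""
    lo: Vec
    hi: Vec

    def centroid(self) -> Vec:
        return tuple((a + b) / 2.0 for a, b in zip(self.lo, self.hi))

@dataclass
class Node:
    lo: Vec
    hi: Vec
    prims: List[Prim]
    left: Optional["Node"] = None    # None on leaves
    right: Optional["Node"] = None   # None on leaves

def construct_bvh(prims: List[Prim], max_leaf_size: int = 4) -> Node:
    # Expand the bounding box over every primitive in this range.
    lo = tuple(min(p.lo[i] for p in prims) for i in range(3))
    hi = tuple(max(p.hi[i] for p in prims) for i in range(3))
    node = Node(lo, hi, prims)
    if len(prims) <= max_leaf_size:
        return node                  # leaf: left and right stay None
    # Assumption: order by centroid along the longest axis, then cut at the median.
    axis = max(range(3), key=lambda i: hi[i] - lo[i])
    ordered = sorted(prims, key=lambda p: p.centroid()[axis])
    mid = len(ordered) // 2
    node.prims = []                  # interior nodes hold no primitives
    node.left = construct_bvh(ordered[:mid], max_leaf_size)
    node.right = construct_bvh(ordered[mid:], max_leaf_size)
    return node

def leaves(node: Node) -> List[Node]:
    """Collect all leaf nodes below (and including) node."""
    if node.left is None and node.right is None:
        return [node]
    return leaves(node.left) + leaves(node.right)
```

Because each split halves the primitive count, 64 primitives with a leaf size of 4 produce 16 leaves after four levels of splitting, which is where the logarithmic traversal cost comes from.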

Direct Illumination

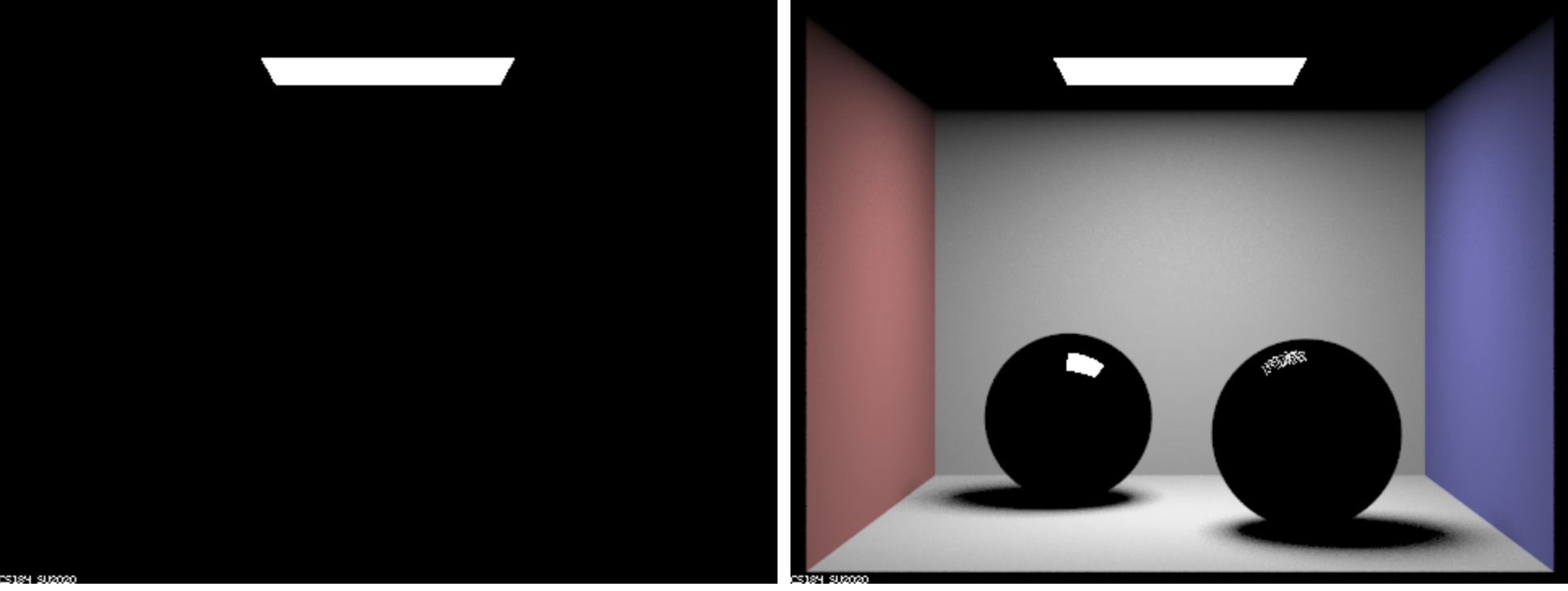

For the f function of the diffuse BSDF, we simply returned reflectance/π, since a diffuse surface scatters light uniformly in all directions. Zero-bounce illumination is just the light emitted at the intersection, which we read from the BSDF.

For direct lighting with uniform hemisphere sampling, every direction on the hemisphere is equally likely, so the pdf is 1/(2π). We iterated over num_samples, and on each iteration we sampled a ray direction and converted it to the world basis so that the ray would be expressed in world space. Using the reflectance equation from lecture, we summed the radiance contributions over all iterations and divided the sum by num_samples to obtain the final estimate of the integral.

For direct lighting with importance sampling of the lights, we looped over all SceneLight objects and generated ns_area_light sample rays per SceneLight in the same way as before. There are three differences from uniform hemisphere sampling: the sampled direction is converted in the opposite direction (from world space into the local basis), the pdf is the one returned with each light sample rather than a constant, and we keep track of the distance to the light so that we can set the ray's max_t appropriately. We then check whether the ray intersects anything before reaching the light, use the reflectance equation to evaluate the radiance, and normalize each light's contribution by its number of samples. Lastly, we return the sum of the normalized radiances over all lights.

The benefit of importance sampling is that it only sends rays toward the lights, so it converges with less noise and fewer rays. The uniform approach casts rays in random directions, many of which never reach a light, which leads to more noise and more “misfires,” or uninformative casts.
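The uniform hemisphere estimator can be illustrated with a small, self-contained Python sketch. The function names and the `incoming_radiance` callback are assumptions made for this example; in the actual renderer the incoming radiance comes from tracing a ray and reading the emission of whatever it hits.

```python
import math
import random

def sample_uniform_hemisphere(rng):
    """Uniformly sample a direction on the hemisphere around +z (local basis)."""
    z = rng.random()                          # cos(theta) is uniform in [0, 1)
    phi = 2.0 * math.pi * rng.random()
    r = math.sqrt(max(0.0, 1.0 - z * z))
    return (r * math.cos(phi), r * math.sin(phi), z)

def estimate_direct_hemisphere(reflectance, incoming_radiance, num_samples, rng):
    """Monte Carlo estimate of outgoing radiance from a diffuse surface.

    incoming_radiance(w_in) is a stand-in for casting a ray along w_in
    and returning the emission of the surface it hits.
    """
    f = reflectance / math.pi                 # diffuse BSDF value
    pdf = 1.0 / (2.0 * math.pi)               # uniform hemisphere pdf
    total = 0.0
    for _ in range(num_samples):
        w_in = sample_uniform_hemisphere(rng)
        cos_theta = w_in[2]                   # angle to the surface normal (+z)
        total += f * incoming_radiance(w_in) * cos_theta / pdf
    return total / num_samples

if __name__ == "__main__":
    rng = random.Random(0)
    # Under constant incoming radiance of 1, the exact answer equals the reflectance.
    print(estimate_direct_hemisphere(0.5, lambda w: 1.0, 20000, rng))
```

With constant incoming radiance the integral has a closed form (the reflectance itself), which makes this a convenient sanity check. When the light covers only a small part of the hemisphere, most samples contribute zero, which is the noise that importance sampling the lights avoids.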

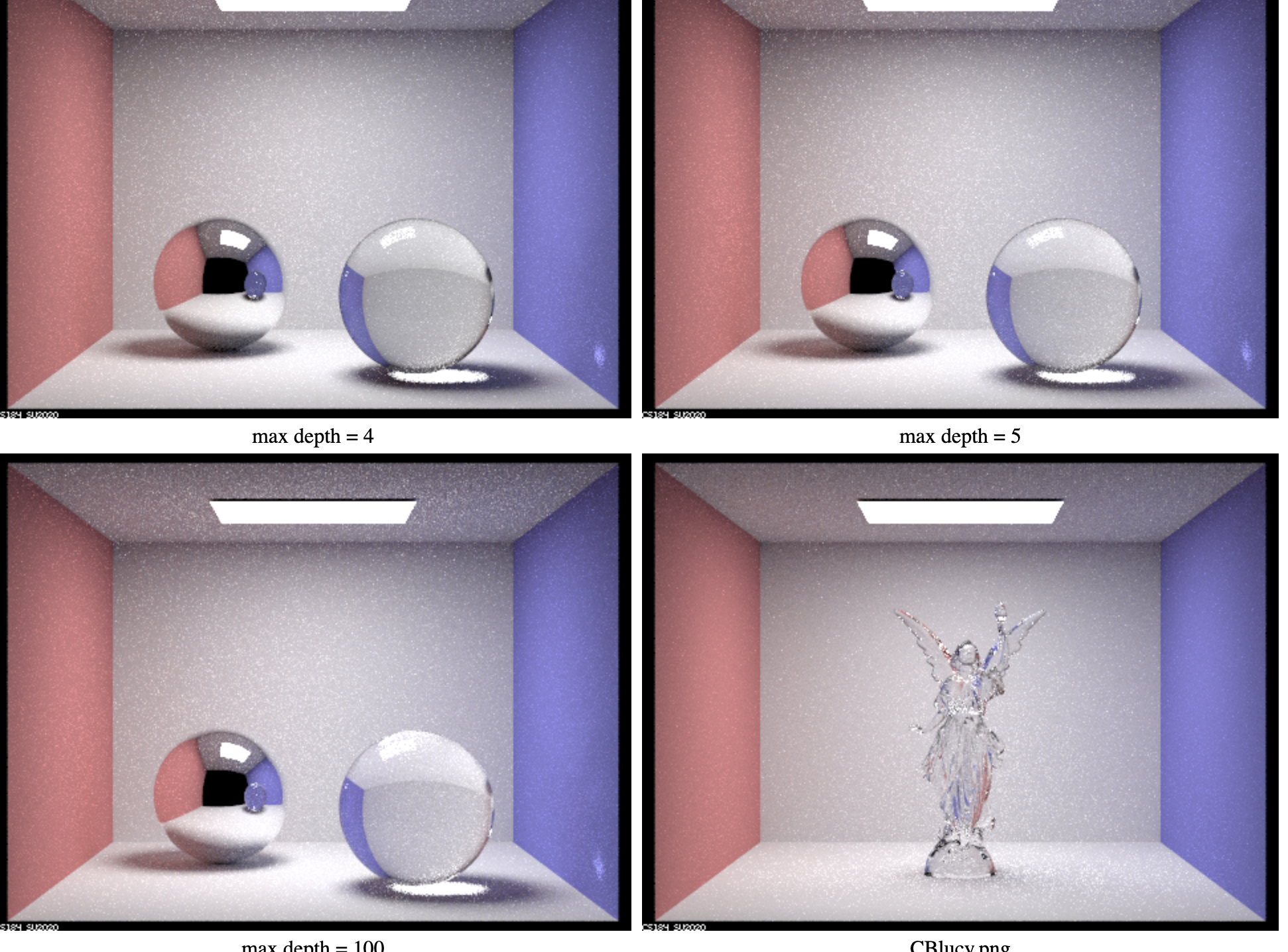

Mirror and Glass Materials

For the image with max ray depth m = 0, we see only the light at the top. Since there are no bounces, nothing else in the image is illuminated. For m = 1, we can make out the spheres, but they remain black: with a max ray depth of only one, light cannot yet bounce off them toward the camera. For m = 2, we can see the mirror sphere, but the glass sphere still looks unclear because the bounce count is too low for light to pass all the way through it. For m = 3, we can now see the glass sphere clearly, as well as the light passing through it. However, the reflection of the glass sphere in the mirror sphere is still a bit unclear. For m = 4, we can see the glass sphere clearly in the mirror sphere. We can also see some of the light refracted onto the right wall. The images for max ray depths 5 and 100 are very similar to each other and only slightly brighter than the previous images.

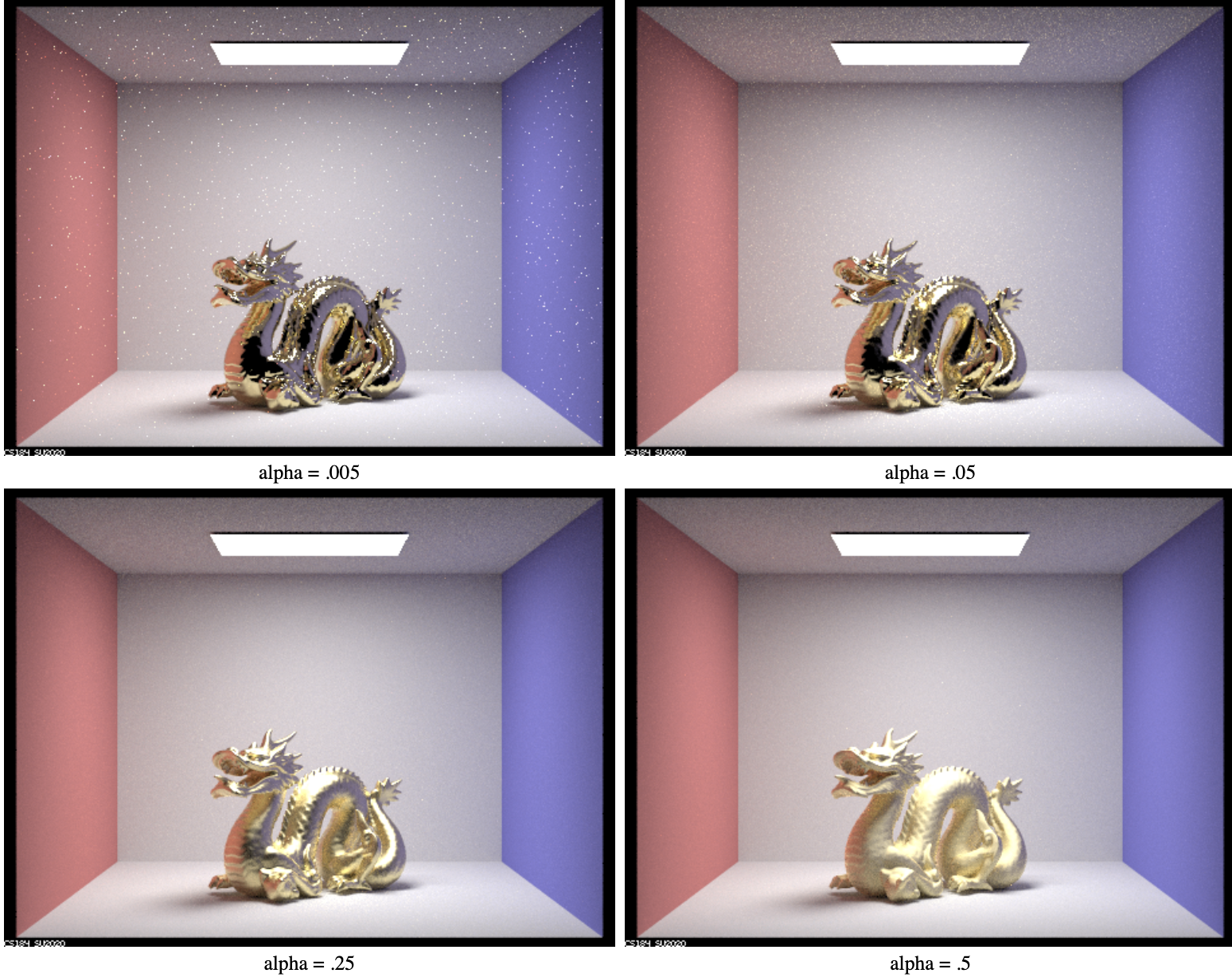

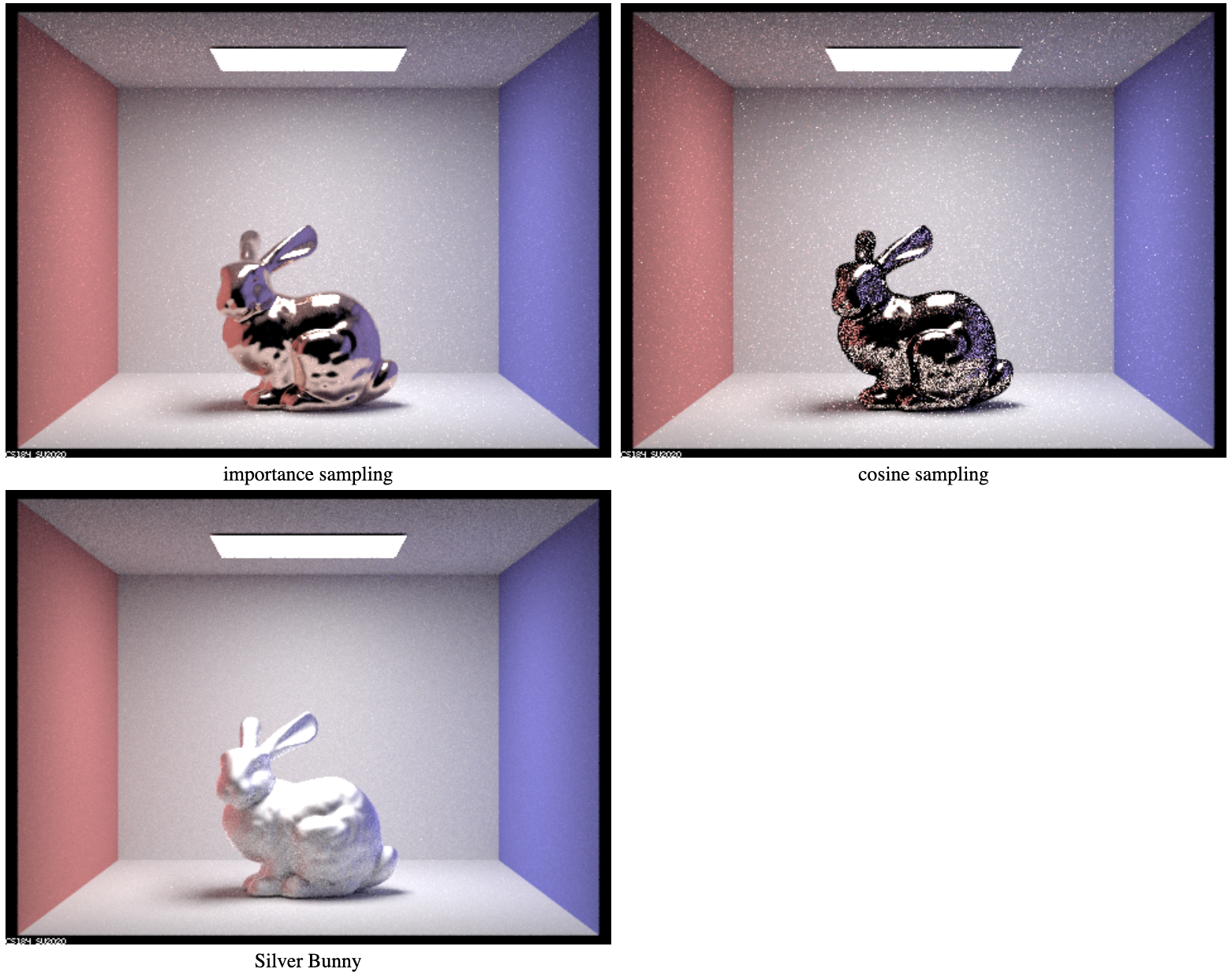

Microfacet Material

A smaller alpha value produces a smoother, more mirror-like appearance, while larger alpha values produce a brighter yet less mirror-like and more opaque image. The bunny rendered with importance sampling clearly has smoother edges and contours, which give it a more realistic 3D shape and color. Furthermore, its lighting is more realistic given the scene and the light above the bunny. The bunny rendered with cosine sampling has a strange dark aura surrounding it and a less smooth surface by comparison. To render a silver bunny, I used the eta parameters (0.0592, 0.0599, 0.0474) and the k parameters (4.1283, 3.5892, 2.8132), which I found by searching online for how to represent silver surfaces. As shown in the image, the bunny appears less mirror-like and has a more silvery sheen to it.
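To show how the eta and k parameters affect the result, the sketch below evaluates a commonly used approximation of the Fresnel reflectance for an air-to-conductor interface, once per color channel. Whether the project used exactly this form is an assumption, and the function names are chosen for this example only.

```python
def fresnel_conductor(cos_theta, eta, k):
    """Approximate unpolarized Fresnel reflectance for an air-conductor interface."""
    e2k2 = eta * eta + k * k
    c2 = cos_theta * cos_theta
    two_eta_cos = 2.0 * eta * cos_theta
    rs = (e2k2 - two_eta_cos + c2) / (e2k2 + two_eta_cos + c2)
    rp = (e2k2 * c2 - two_eta_cos + 1.0) / (e2k2 * c2 + two_eta_cos + 1.0)
    return 0.5 * (rs + rp)

# Silver parameters quoted in the text, one value per RGB channel.
SILVER_ETA = (0.0592, 0.0599, 0.0474)
SILVER_K = (4.1283, 3.5892, 2.8132)

def silver_fresnel(cos_theta):
    """Per-channel reflectance of silver at the given cosine of the incident angle."""
    return tuple(fresnel_conductor(cos_theta, e, k)
                 for e, k in zip(SILVER_ETA, SILVER_K))

if __name__ == "__main__":
    print(silver_fresnel(1.0))   # normal incidence
    print(silver_fresnel(0.2))   # near-grazing incidence
```

With these parameters the reflectance is high in all three channels at normal incidence, which is consistent with the bright, nearly neutral sheen of silver.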